Having presented a poster at the 2017 OptaPro Analytics Forum, Martin Eastwood provides a written analysis of his work, discussing the processes underpinning it, the approach taken, challenges along the way and how it was received by the football analytics industry.

Follow Martin on Twitter: @penaltyblog

Introduction

It’s the 67th minute and Crystal Palace are losing 1-0 at home to AFC Bournemouth. Andros Townsend has the ball outside the box and elects to shoot despite having team-mates closer to goal that he could pass to. The ball flies into the goalkeeper’s hands and possession is lost.

How do we evaluate the decisions footballers make during matches?

This was the question I asked myself for this year’s OptaPro Analytics Forum and the solution I settled on was to use machine learning. For those of you that haven’t come across machine learning before, it’s a form of artificial intelligence that can give computers the ability to learn without being explicitly programmed. Machine learning is how Google’s self-driving cars know where to go and how Facebook automatically recognises your friends’ faces in your photographs.

The second part of the task was how to make these insights accessible to football teams. Machine learning is quite a hefty subject involving plenty of complex mathematics, so how could I take this idea and present it to a football club in a way that was relevant to them?

The data

I started off the work with grand visions of solving football. Google recently used a machine learning technique known as Deep Learning to beat the world champion of the board game Go and I wanted to apply the same concepts here. However, with only six weeks to complete all the analyses and put a presentation together, I reined in my ambitions to a more realistic level and decided to focus purely on assessing teams’ attacking actions in and around the penalty box.

To do this, I applied for both Opta on-ball event data and ChyronHego tracking data as part of my proposal. Opta data gives a set of on-ball events that occur during the match whilst the optical tracking data gives you XY co-ordinates for all the players on pitch 25 times per second.

Identifying good decisions

Since the aim was to evaluate footballers’ decisions, I needed a way of determining what were good and bad decisions. After exploring a few options, I settled on looking at whether players’ actions were increasing their team’s overall likelihood of scoring.

To calculate this likelihood of scoring, I created a neural network based on the location of the player shooting at goal and the locations of all opposition players. I then checked the accuracy of how well the neural network predicted goals scored by testing it on a set of shots the network had never seen before.
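As a rough illustration of testing on unseen shots (a sketch, not the code used for this work), the snippet below holds back a random share of shots and scores predicted goal probabilities against what actually happened. The function names, the 20% test share and the choice of the Brier score as the measure are all assumptions made for the example; the article does not say which accuracy measure was used.

```python
import random
from typing import List, Sequence, Tuple


def train_test_split(shots: Sequence, test_fraction: float = 0.2,
                     seed: int = 0) -> Tuple[list, list]:
    """Shuffle the shots and hold back a fraction the model never trains on."""
    shuffled = list(shots)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def brier_score(predicted: List[float], outcomes: List[int]) -> float:
    """Mean squared gap between predicted probability and outcome (1 = goal).

    Lower is better; 0.0 would be a perfect set of predictions.
    """
    return sum((p - y) ** 2 for p, y in zip(predicted, outcomes)) / len(outcomes)


# Hypothetical held-out shots: predicted chance of scoring and what happened.
predictions = [0.05, 0.30, 0.10, 0.60]
goals = [0, 1, 0, 1]
print(brier_score(predictions, goals))
```

Scoring only on the held-back shots is what stops a model from looking good simply because it has memorised the shots it was trained on.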

Unfortunately, the results were somewhat underwhelming. Neural networks can require a lot of data to train, and with only a limited set of matches to build the model from there just wasn’t enough data for the network to fully converge. I tried simplifying the model by only including the locations of the opposition defenders and goalkeeper but this didn’t really improve things much.

I needed to give the network a bit of a helping hand at identifying the relevant information in the data so I added in a number of extra features, including players’ Voronoi tessellations, rather than relying on just the raw XY coordinates.

Voronoi tessellations are shapes drawn around each player marking the area that is closer to the player than to any other player (see the example in Figure 1 below).

The larger the area of a player’s Voronoi, the greater the amount of space they have around them and the less pressure they’re presumably under from the opposition. This additional feature engineering worked wonders and the neural network’s accuracy improved considerably.
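To make the idea of a Voronoi area concrete, here is a minimal sketch that approximates it by laying a fine grid over the pitch and giving each grid cell to the nearest player. This is an illustration only: the 105m x 68m pitch size, the grid resolution, the function name and the player positions are assumptions, and a proper implementation would more likely use a computational geometry library than a grid.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def approx_voronoi_areas(players: List[Point],
                         pitch_length: float = 105.0,
                         pitch_width: float = 68.0,
                         cell: float = 0.5) -> List[float]:
    """Approximate each player's Voronoi area in square metres.

    Every grid cell is assigned to whichever player is closest to its
    centre, so a player's area is the part of the pitch nearer to them
    than to anyone else.
    """
    areas = [0.0] * len(players)
    n_x = int(pitch_length / cell)
    n_y = int(pitch_width / cell)
    for i in range(n_x):
        x = (i + 0.5) * cell
        for j in range(n_y):
            y = (j + 0.5) * cell
            nearest = min(
                range(len(players)),
                key=lambda k: (players[k][0] - x) ** 2 + (players[k][1] - y) ** 2,
            )
            areas[nearest] += cell * cell
    return areas


# Hypothetical positions: one isolated player and three bunched together.
positions = [(10.0, 34.0), (90.0, 30.0), (92.0, 30.0), (90.0, 32.0)]
for position, area in zip(positions, approx_voronoi_areas(positions)):
    print(position, round(area, 1))
```

The isolated player ends up with by far the largest area, which is the "more space, less pressure" signal described above.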

Interpretability

Although neural networks are great at many things, one of their downsides is that they are difficult to interpret as they are essentially black boxes. You feed your data into one end and get a result out the other but you don’t really know how or why the network has come up with the answer it has.

I wanted to be able to discuss the results with football teams and from experience it can take a leap of faith for people without a mathematical background to trust black box algorithms so I also created a simpler model based on a logistic regression.

Although the regression’s accuracy didn’t match that of the neural network, it created a set of coefficients that could be used to help explain each result. For example, if a player only had a 5% chance of scoring I could show a coach exactly how much of that was due to the player’s location on the pitch, their angle towards the goal, how many defenders were around them, how small their Voronoi area was, and so on.
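The sketch below shows how logistic regression coefficients can be turned into a per-feature explanation. The feature names, the intercept and every coefficient value are invented purely for illustration and are not taken from the model described here. One point worth noting is that the contributions add up on the log-odds scale rather than as percentages directly.

```python
import math
from typing import Dict, Tuple

# Hypothetical intercept and coefficients, invented for this example only.
INTERCEPT = -1.0
COEFFICIENTS: Dict[str, float] = {
    "distance_to_goal_m": -0.10,
    "angle_to_goal_rad": 1.20,
    "defenders_within_5m": -0.40,
    "voronoi_area_m2": 0.01,
}


def explain_shot(features: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Return the scoring probability and each feature's log-odds contribution."""
    contributions = {
        name: COEFFICIENTS[name] * features[name] for name in COEFFICIENTS
    }
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, contributions


# A hypothetical shot from distance with two defenders close by.
shot = {
    "distance_to_goal_m": 25.0,
    "angle_to_goal_rad": 0.3,
    "defenders_within_5m": 2.0,
    "voronoi_area_m2": 20.0,
}
probability, contributions = explain_shot(shot)
print(f"chance of scoring: {probability:.1%}")
for name, value in sorted(contributions.items(), key=lambda item: item[1]):
    print(f"{name}: {value:+.2f} log-odds")
```

Sorting the contributions puts the factors dragging the chance down first, which is the kind of breakdown a coach can read without needing the underlying mathematics.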

After leaving my computer churning through the data for a few days I was finally in the happy position of being able to show the impact of each event on a team’s likelihood of scoring and explain why each action was having a positive or negative effect.

Presenting the data

The main output I wanted to present was how players’ actions were affecting their team’s chance of scoring a goal, so to do this I created a web app that animated the tracking data in real time (see close of the article for further details).

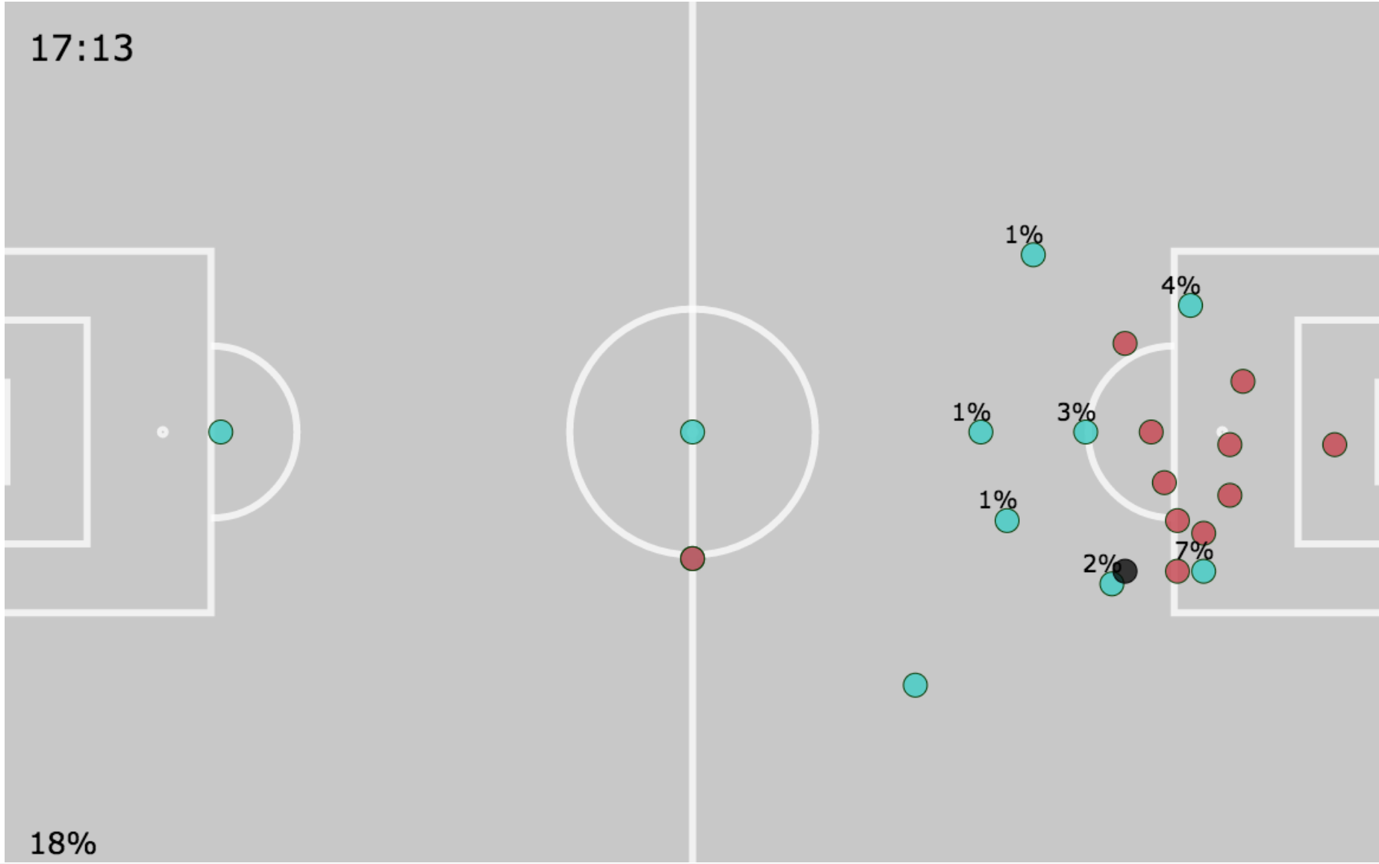

I then identified which team was attacking and overlaid the probability of the player with the ball scoring from his current location, as well as the probability of the player successfully passing to a team member and them shooting and scoring instead.

Figure 2 shows an example screenshot where the player with the ball only has a 2% chance of scoring if they were to shoot from their current location. The same player also has a 7% chance of successfully passing to a nearby team-mate and them shooting and scoring instead.
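The comparison between shooting and passing can be sketched as below. The assumption here, which the article does not confirm, is that the chance of a pass leading to a goal is the chance of the pass being completed multiplied by the chance of the receiver then scoring. The class and function names are hypothetical, and the 70% and 10% inputs are invented so that they multiply out to the 7% in the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PassOption:
    receiver: str
    p_pass_completed: float   # chance the pass reaches the team-mate
    p_receiver_scores: float  # chance the team-mate then shoots and scores

    @property
    def p_goal(self) -> float:
        # Assumes the two steps can simply be multiplied together.
        return self.p_pass_completed * self.p_receiver_scores


def best_decision(p_shoot: float, options: List[PassOption]) -> Tuple[str, float]:
    """Pick the action with the highest estimated chance of a goal."""
    best = ("shoot", p_shoot)
    for option in options:
        if option.p_goal > best[1]:
            best = (f"pass to {option.receiver}", option.p_goal)
    return best


# Hypothetical inputs chosen to mirror the 2% versus 7% example.
action, p_goal = best_decision(0.02, [PassOption("team-mate A", 0.70, 0.10)])
print(action, f"{p_goal:.0%}")
```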

If you watch the video of this example back then the pass is clearly the better option but we get the added benefit here of being able to quantify exactly how much better that decision is in terms of scoring a goal.

You can then aggregate these decisions over a longer period to see how each player’s decisions are impacting their team.

The example above presumes that players are typically looking to shoot or pass directly to a team-mate while attacking but this isn’t always the case. Often players are looking to move the ball into space for a team-mate to run on to. To account for this I added in the cumulative percentage chance of scoring, which is the value shown in the bottom left corner of Figure 2.

This metric combines all the individual player percentages into one number so you can see whether the team’s movement and shape is having a positive or negative impact on their overall likelihood of scoring. Using a cumulative percentage here isn’t strictly accurate as only one of those players actually gets to have a shot, but I found it to be a useful proxy for a team’s overall goal threat and the concept seemed to go down really well with the analysts I showed it to.
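One simple way to read the cumulative figure is as the sum of each player's individual percentage. That is an assumption made for the sketch below, since the exact formula is not given here, and the player labels and numbers are invented. The best single option is shown alongside it to make clear why the sum overstates the true chance when only one player can take the shot.

```python
from typing import Dict


def cumulative_threat(p_goal_by_player: Dict[str, float]) -> float:
    """Sum of every attacker's individual chance of scoring (a proxy only)."""
    return sum(p_goal_by_player.values())


def best_single_option(p_goal_by_player: Dict[str, float]) -> float:
    """The highest individual chance, i.e. the best one shot available."""
    return max(p_goal_by_player.values(), default=0.0)


# Hypothetical snapshot of one frame of an attack.
frame = {"ball carrier": 0.02, "team-mate A": 0.07, "team-mate B": 0.03}
print(f"cumulative: {cumulative_threat(frame):.0%}")
print(f"best single option: {best_single_option(frame):.0%}")
```

Tracking how the summed value moves from frame to frame gives a feel for whether the team's shape is becoming more or less threatening, even though it is not a true probability.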

Feedback

I was fortunate enough to have the opportunity to discuss the work in detail with a number of coaches and analysts from professional teams and the feedback was overwhelmingly positive. The youth team coaches in particular thought the app would be a great way of teaching kids what to do in specific situations as they’d be able to watch the percentages change in real time as players make runs, cross the ball, etc.

In fact, the whole interactive aspect of the app seemed to go down really well. Rather than being a static graph or a spreadsheet of numbers, actually being able to watch the players running around and see how that affected their team’s chance of scoring really seemed to engage people and grab their attention. Throughout the course of the day there was a steady stream of people playing with the app and jumping through the footage to explore the effects of different types of events.

Next steps

I only had limited time to develop the app for the OptaPro Forum and there was a whole heap of ideas I didn’t get the chance to implement. One area that was high up on my to-do list was to look at whether it could all be flipped to quantify defending rather than just attacking, for example by looking at how well defenders are doing at guiding attackers to less dangerous locations, whether they are breaking their defensive line and so on.

I was also keen to try overlaying the data on top of video. The way the data is presented may need tweaking a bit but there’s the potential for even greater engagement when users can see players in the video rather than just the simple two-dimensional graphics I’ve drawn for the animations.

Finally…

I’ve put together a quick video showing the goal probabilities and some of the other overlays you can add to the tracking data here for anyone that wants to see the app in action.